Evaluation of Local Interpretable Model-Agnostic Explanation and Shapley Additive Explanation for Chronic Heart Disease Detection

DOI: https://doi.org/10.46604/peti.2023.10101

Keywords: SHAP, LIME, XGBoost, counterfactual explanation

Abstract

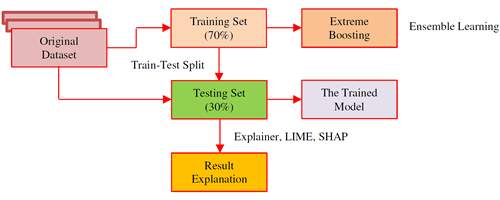

This study aims to investigate the effectiveness of local interpretable model-agnostic explanation (LIME) and Shapley additive explanation (SHAP) approaches for chronic heart disease detection. The efficiency of LIME and SHAP is evaluated by analyzing the diagnostic results of the XGBoost model and the stability and quality of counterfactual explanations. First, 1025 heart disease samples are collected from the University of California, Irvine (UCI). Then, the performance of LIME and SHAP is compared on the XGBoost model using measures such as consistency and proximity. Finally, the simulations are carried out in Python 3.7 within the Jupyter Notebook environment. The simulation results show that the XGBoost model achieves 99.79% accuracy and that its counterfactual explanation describes the smallest changes in feature values needed to change the diagnosis outcome to the predefined output.
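The abstract relies on two explanation ingredients: additive feature attributions (the idea behind SHAP) and counterfactual explanations judged by how close they stay to the original instance (proximity). The following is a minimal, dependency-free Python sketch of both ideas; it is not the study's code. It assumes a hypothetical linear risk score in place of the trained XGBoost model (the weights, bias, and example values are illustrative; the feature names `age`, `chol`, `thalach`, and `oldpeak` are borrowed from the UCI heart-disease attributes). It computes exact Shapley values against a baseline instance by enumerating every coalition, whereas the `shap` library uses TreeSHAP for tree ensembles. Proximity is taken here as a range-normalised L1 distance, which is one common choice but an assumption, since the abstract does not define it.

```python
import itertools
import math

# Hypothetical stand-in for the trained XGBoost classifier: a linear risk
# score over four UCI heart-disease attributes. Weights and bias are
# illustrative assumptions, not values from the study.
FEATURES = ["age", "chol", "thalach", "oldpeak"]
WEIGHTS = {"age": 0.04, "chol": 0.01, "thalach": -0.03, "oldpeak": 0.8}
BIAS = -1.0


def score(x):
    """Linear risk score; a positive value means 'disease' is predicted."""
    return BIAS + sum(WEIGHTS[f] * x[f] for f in FEATURES)


def predict(x):
    """Binary diagnosis: 1 = disease, 0 = no disease."""
    return 1 if score(x) > 0 else 0


def shapley_values(x, baseline):
    """Exact Shapley values of score(x) relative to a baseline instance.

    Features outside a coalition take their baseline value (an assumed
    interventional value function). All 2^(n-1) coalitions are enumerated
    per feature, so this is only feasible for a handful of features.
    """
    n = len(FEATURES)
    phi = {}
    for f in FEATURES:
        others = [g for g in FEATURES if g != f]
        total = 0.0
        for k in range(n):
            # Shapley weight for coalitions of size k that exclude f.
            weight = math.factorial(k) * math.factorial(n - k - 1) / math.factorial(n)
            for coalition in itertools.combinations(others, k):
                without_f = {g: x[g] if g in coalition else baseline[g] for g in FEATURES}
                with_f = dict(without_f)
                with_f[f] = x[f]
                total += weight * (score(with_f) - score(without_f))
        phi[f] = total
    return phi


def proximity(x, cf, scale):
    """Range-normalised L1 distance between an instance and its counterfactual."""
    return sum(abs(x[f] - cf[f]) / scale[f] for f in FEATURES)


def single_feature_counterfactual(x, target, scale, steps=200):
    """Brute-force search for the closest single-feature counterfactual.

    Each feature is moved up and down in steps of 1% of its range until
    predict() returns `target`; the first flip in each direction is a
    candidate, and the candidate with the smallest proximity wins.
    Returns (None, inf) if no single-feature change flips the prediction.
    """
    best, best_dist = None, math.inf
    for f in FEATURES:
        for direction in (-1, 1):
            for i in range(1, steps + 1):
                cand = dict(x)
                cand[f] = x[f] + direction * i * scale[f] / 100
                if predict(cand) == target:
                    d = proximity(x, cand, scale)
                    if d < best_dist:
                        best, best_dist = cand, d
                    break
    return best, best_dist


if __name__ == "__main__":
    # Illustrative patient, reference instance, and feature ranges (assumed values).
    patient = {"age": 60, "chol": 240, "thalach": 140, "oldpeak": 2.0}
    baseline = {"age": 54, "chol": 246, "thalach": 150, "oldpeak": 1.0}
    scale = {"age": 48, "chol": 438, "thalach": 131, "oldpeak": 6.2}

    phi = shapley_values(patient, baseline)
    print("prediction:", predict(patient))
    print("Shapley values:", {f: round(v, 3) for f, v in phi.items()})
    cf, dist = single_feature_counterfactual(patient, target=0, scale=scale)
    print("counterfactual:", cf, "proximity:", round(dist, 3))
```

With these assumed values, the example patient is predicted as diseased, `oldpeak` receives the largest Shapley value, and lowering `oldpeak` alone is the closest single-feature counterfactual under this proximity measure. For a linear score, each Shapley value reduces to w_f(x_f - b_f), so the attributions sum exactly to score(x) - score(baseline), the additivity property SHAP builds on; a tree ensemble such as XGBoost needs TreeSHAP or sampling rather than full enumeration.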



License

Submission of a manuscript implies that the work described has not been published before and that it is not under consideration for publication elsewhere. If and when the manuscript is accepted for publication, authors retain copyright of their article with no restrictions. Authors can also post the final, peer-reviewed manuscript version (postprint) to any repository or website.

Since Oct. 01, 2015, PETI has published new articles under the Creative Commons Attribution Non-Commercial 4.0 International (CC BY-NC 4.0) License.

The Creative Commons Attribution Non-Commercial (CC BY-NC) License permits use, distribution, and reproduction in any medium, provided the original work is properly cited and is not used for commercial purposes.