A Review of Advances in Bio-Inspired Visual Models Using Event- and Frame-Based Sensors

DOI:

https://doi.org/10.46604/aiti.2024.14121

Keywords:

bio-inspired model, computer vision, event-based sensor, optic flow, dynamic vision sensor (DVS)

Abstract

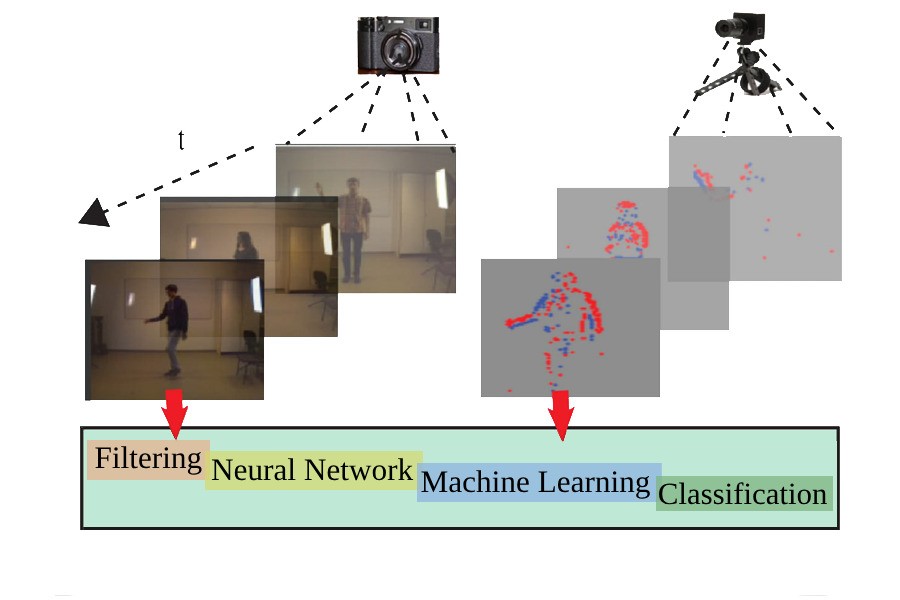

This paper reviews visual system models that use event- and frame-based vision sensors. Event-based sensors mimic the retina by recording data only in response to changes in the visual field, which reduces redundancy and supports real-time processing. In contrast, frame-based sensors capture redundant data in every frame and therefore require more processing resources. This research develops a hybrid model that combines both sensor types to enhance efficiency and reduce latency. Through simulations and experiments, this approach addresses limitations in data integration and speed, offering improvements over existing methods. State-of-the-art systems are highlighted, particularly in sensor fusion and real-time processing, where dynamic vision sensor (DVS) technology demonstrates significant potential. The study also discusses current limitations, such as latency and integration challenges, and explores potential solutions that integrate biological and computer vision approaches to improve scene perception. These findings have important implications for vision systems, especially in robotics and autonomous applications that demand real-time processing.
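The contrast between the two sensor types can be illustrated with a minimal sketch. It is not the model developed in the paper: the function name `frames_to_events`, the `threshold` and `eps` parameters, and the toy scene are all assumptions made for illustration. It emulates a DVS-like pixel by emitting an event only when the log intensity at a pixel changes by at least a threshold, whereas a frame-based sensor would report every pixel in every frame. Real event cameras operate asynchronously with fine-grained timestamps, which this frame-driven emulation does not capture.

```python
import math


def frames_to_events(frames, threshold=0.2, eps=1e-3):
    """Emit (t, x, y, polarity) events where log intensity changes by >= threshold.

    Hypothetical helper for illustration; frames is a list of 2D lists of
    non-negative intensities. eps avoids log(0).
    """
    # Per-pixel reference log intensity, initialised from the first frame.
    ref = [[math.log(v + eps) for v in row] for row in frames[0]]
    events = []
    for t, frame in enumerate(frames[1:], start=1):
        for y, row in enumerate(frame):
            for x, v in enumerate(row):
                log_v = math.log(v + eps)
                diff = log_v - ref[y][x]
                if abs(diff) >= threshold:
                    # Polarity +1 for brightening, -1 for darkening.
                    events.append((t, x, y, 1 if diff > 0 else -1))
                    # Reset the reference only for the pixel that fired.
                    ref[y][x] = log_v
    return events


# A static 2x2 scene in which a single pixel brightens in the last frame.
frames = [
    [[0.5, 0.5], [0.5, 0.5]],
    [[0.5, 0.5], [0.5, 0.5]],
    [[0.5, 1.0], [0.5, 0.5]],
]
events = frames_to_events(frames)
print(events)
```

In this toy scene the frame-based representation holds twelve pixel samples, while the event-based emulation reports only the one pixel that changed, which is the redundancy reduction the abstract refers to.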

License

Copyright (c) 2025 Aya Zuhair Salim, Luma Issa Abdul-Kareem

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.

Submission of a manuscript implies that the work described has not been published before and that it is not under consideration for publication elsewhere. If and when the manuscript is accepted for publication, authors can retain copyright in their articles with no restrictions.

Since Jan. 01, 2019, AITI has published new articles under the Creative Commons Attribution Non-Commercial 4.0 International (CC BY-NC 4.0) License.

The Creative Commons Attribution Non-Commercial (CC-BY-NC) License permits use, distribution and reproduction in any medium, provided the original work is properly cited and is not used for commercial purposes.