Evaluation of the Shapley Additive Explanation Technique for Ensemble Learning Methods

DOI: https://doi.org/10.46604/peti.2022.9025

Keywords: model explanation, random forest, ensemble method, interpretability

Abstract

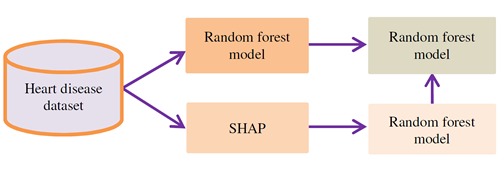

This study explores the effectiveness of the Shapley additive explanation (SHAP) technique in developing a transparent, interpretable, and explainable ensemble method for heart disease diagnosis based on the random forest algorithm. First, SHAP is used to select the features with the highest impact on heart disease prediction, using a dataset of 1025 heart disease records obtained from the publicly available Kaggle data repository. Next, the features with the greatest influence on the prediction are used to build an interpretable ensemble learning model, explained with SHAP, that automates heart disease diagnosis. Finally, the performance of the developed model is evaluated. The SHAP values are used to improve diagnostic performance. The experimental results show that the developed model achieves 100% prediction accuracy. In addition, the experiment shows that age, chest pain, and maximum heart rate have a positive impact on the prediction outcome.
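The abstract describes SHAP-based feature selection only at a high level. The following is a minimal, self-contained Python sketch of that idea under stated assumptions, not the authors' code: it computes exact Shapley values by enumerating feature coalitions (feasible only for a handful of features), lets features outside a coalition take the value of a single baseline row, and ranks features by mean absolute SHAP value. The "forest" is a hypothetical average of three hand-written decision stumps with made-up thresholds and leaf scores, standing in for a trained random forest; the feature names `age`, `cp`, `thalach`, and `chol` are assumed to follow the column names of the Kaggle heart disease dataset. A real pipeline would more likely train a scikit-learn `RandomForestClassifier` and use `shap.TreeExplainer`, which computes tree SHAP values efficiently instead of enumerating subsets.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, List, Sequence, Set

Row = Dict[str, float]
Model = Callable[[Row], float]


def shapley_values(model: Model, x: Row, baseline: Row,
                   features: Sequence[str]) -> Dict[str, float]:
    """Exact Shapley values of model(x) relative to one baseline row.

    Features outside a coalition are set to their baseline value, so the
    values satisfy sum(phi) == model(x) - model(baseline). Cost is
    exponential in len(features): illustration only.
    """
    n = len(features)

    def coalition_value(coalition: Set[str]) -> float:
        # Features in the coalition keep x's value; the rest use the baseline.
        z = {f: (x[f] if f in coalition else baseline[f]) for f in features}
        return model(z)

    phi: Dict[str, float] = {}
    for i in features:
        others = [f for f in features if f != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (coalition_value(s | {i}) - coalition_value(s))
        phi[i] = total
    return phi


def rank_features(model: Model, rows: List[Row], baseline: Row,
                  features: Sequence[str]) -> List[str]:
    """Order features by mean absolute Shapley value over rows (largest first)."""
    totals = {f: 0.0 for f in features}
    for row in rows:
        for f, v in shapley_values(model, row, baseline, features).items():
            totals[f] += abs(v)
    return sorted(features, key=lambda f: totals[f] / len(rows), reverse=True)


def stump(feature: str, threshold: float, above: float, below: float) -> Model:
    """A one-split decision tree returning a score for each side of the split."""
    return lambda row: above if row[feature] > threshold else below


# Hypothetical ensemble: thresholds and leaf scores are made up, not fitted.
FEATURES = ["age", "cp", "thalach", "chol"]
TREES = [
    stump("cp", 0.5, 0.8, 0.3),
    stump("thalach", 150, 0.7, 0.4),
    stump("age", 55, 0.6, 0.4),
]


def forest(row: Row) -> float:
    # Average the trees' scores, as a random forest averages its trees.
    return sum(tree(row) for tree in TREES) / len(TREES)


if __name__ == "__main__":
    x = {"age": 60, "cp": 2, "thalach": 170, "chol": 250}
    base = {"age": 50, "cp": 0, "thalach": 140, "chol": 200}
    print(rank_features(forest, [x], base, FEATURES)[:3])  # → ['cp', 'thalach', 'age']
```

Keeping the top-k features returned by `rank_features` and retraining on them mirrors the select-then-retrain workflow the abstract describes; the choice of k is left open here. Because `chol` is never used by any stump, its Shapley value is exactly zero, which is the behavior a SHAP-based selector relies on to discard uninformative features.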

License

Submission of a manuscript implies that the work described has not been published before; that it is not under consideration for publication elsewhere; and that if and when the manuscript is accepted for publication. Authors can retain copyright of their article without restrictions. Authors can also post the final, peer-reviewed manuscript version (postprint) to any repository or website.

Since Oct. 1, 2015, PETI has published new articles under the Creative Commons Attribution Non-Commercial 4.0 International (CC BY-NC 4.0) License.

The Creative Commons Attribution Non-Commercial (CC BY-NC) License permits use, distribution, and reproduction in any medium, provided the original work is properly cited and is not used for commercial purposes.