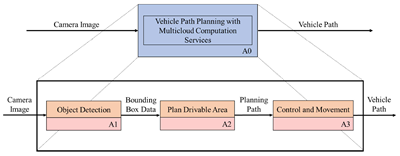

Vehicle Path Planning with Multicloud Computation Services

DOI:

https://doi.org/10.46604/aiti.2021.7192

Keywords:

computer vision, scene recognition, cloud computing, object detection

Abstract

With the development of artificial intelligence, public cloud service platforms have begun to offer general-purpose pretrained object recognition models for public use. This study develops a dynamic vehicle path-planning system that uses several of these pretrained cloud models to detect obstacles and calculate the navigable area. The system integrates the bounding box information returned by multiple cloud object detection services, then plans the vehicle path using Euclidean distances and inequalities computed from the detected bounding boxes. Experimental results show that the proposed method can effectively identify the driving area and plan a safe route. Cloud-based obstacle identification takes 2 s per frame, and feasible area detection and action planning take 0.001 s per frame. In the experiments, a robot using the proposed navigation method planned its routes successfully.
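The abstract does not spell out the planning rules, so the following Python sketch is only an illustration of the general idea, not the authors' algorithm. It assumes each cloud service returns axis-aligned bounding boxes as `(x_min, y_min, x_max, y_max)` in pixels, pools them into one obstacle list, finds free horizontal intervals with inequality tests, and uses the Euclidean distance from an assumed vehicle reference point (the bottom centre of the image) to decide whether to steer. All function names, the action labels, and the thresholds `min_gap` and `safe_distance` are hypothetical.

```python
import math
from typing import List, Tuple

# Assumed box format: (x_min, y_min, x_max, y_max) in image pixels.
Box = Tuple[float, float, float, float]


def merge_detections(per_service: List[List[Box]]) -> List[Box]:
    """Pool the boxes from every service into one obstacle list (plain union)."""
    return [box for boxes in per_service for box in boxes]


def free_intervals(boxes: List[Box], width: float) -> List[Tuple[float, float]]:
    """Return the horizontal intervals of the image not covered by any box."""
    spans = sorted((max(0.0, b[0]), min(width, b[2])) for b in boxes)
    gaps, cursor = [], 0.0
    for left, right in spans:
        if left > cursor:  # inequality test: free space before this box
            gaps.append((cursor, left))
        cursor = max(cursor, right)
    if cursor < width:
        gaps.append((cursor, width))
    return gaps


def nearest_obstacle_distance(boxes: List[Box], origin: Tuple[float, float]) -> float:
    """Euclidean distance from the origin to the closest box bottom centre."""
    if not boxes:
        return math.inf
    return min(
        math.hypot((b[0] + b[2]) / 2.0 - origin[0], b[3] - origin[1]) for b in boxes
    )


def plan_action(boxes: List[Box], width: float, height: float,
                min_gap: float = 120.0, safe_distance: float = 100.0) -> str:
    """Choose 'forward', 'left', 'right', or 'stop' (hypothetical action set)."""
    origin = (width / 2.0, height)  # assumed vehicle reference point
    if nearest_obstacle_distance(boxes, origin) > safe_distance:
        return "forward"
    gaps = [g for g in free_intervals(boxes, width) if g[1] - g[0] >= min_gap]
    if not gaps:
        return "stop"
    left, right = max(gaps, key=lambda g: g[1] - g[0])  # widest usable gap
    centre = (left + right) / 2.0
    if centre < origin[0]:
        return "left"
    if centre > origin[0]:
        return "right"
    return "forward"


if __name__ == "__main__":
    service_a = [(200, 300, 440, 470)]
    service_b = [(210, 310, 450, 480), (0, 350, 100, 480)]
    obstacles = merge_detections([service_a, service_b])
    print(plan_action(obstacles, width=640, height=480))
```

Pooling the boxes as a plain union is the most conservative choice: an obstacle reported by any one service blocks the path. A real system might instead deduplicate overlapping boxes or weight them by each service's confidence score.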

License

Submission of a manuscript implies that the work described has not been published before and that it is not under consideration for publication elsewhere. Authors can retain copyright in their articles with no restrictions.

Since Jan. 01, 2019, AITI has published new articles under the Creative Commons Attribution Non-Commercial 4.0 International (CC BY-NC 4.0) License.

The Creative Commons Attribution Non-Commercial (CC-BY-NC) License permits use, distribution and reproduction in any medium, provided the original work is properly cited and is not used for commercial purposes.