Optimized Feature Extraction for Precise Sign Gesture Recognition Using Self-improved Genetic Algorithm

Keywords:

gesture sign recognition, GA, SIGA, feed-forward neural network

Abstract

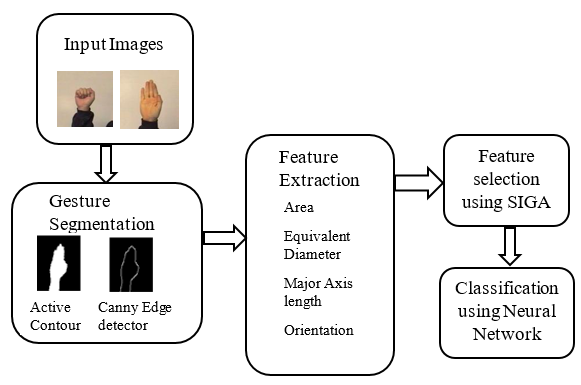

Over the past two years, gesture recognition has become a powerful means of communication for the hearing-impaired community. It also supports interaction between humans and computers. However, gesture recognition becomes difficult when the environment is complex. This paper proposes a recognition algorithm with feature selection based on a Self-Improved Genetic Algorithm (SIGA) to achieve efficient gesture recognition. The recognition process consists of segmentation, feature extraction, and classification with a feed-forward neural network. Following the gesture recognition experiments, the performance of the proposed SIGA is compared with conventional methods reported in the literature and with the standard Genetic Algorithm (GA). The effect of optimization and the feature sensitivity are also demonstrated. Overall, the proposed method achieves better aggregate performance than the conventional algorithms.
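The abstract does not describe what makes SIGA "self-improved", so the sketch below shows only the standard GA baseline it is compared against, applied to feature selection: each chromosome is a 0/1 mask over the feature vector, and fitness is the validation accuracy of a classifier that sees only the selected features. It is an illustration under stated assumptions, not the authors' method. Assumptions: a nearest-centroid classifier stands in for the paper's feed-forward neural network to keep the example short and dependency-free; the function names `ga_feature_selection`, `centroid_accuracy`, and `make_toy_data`, the tournament selection, one-point crossover, bit-flip mutation, elitism, the feature-count penalty, and all parameter values are hypothetical choices; the data are synthetic.

```python
import random


def centroid_accuracy(train, valid, mask):
    """Validation accuracy of a nearest-centroid classifier that only sees the
    features switched on in `mask`. Hypothetical stand-in for the paper's
    feed-forward neural network, chosen only to keep this sketch short."""
    idx = [i for i, bit in enumerate(mask) if bit]
    if not idx:
        return 0.0  # an empty feature subset cannot classify anything
    # Per-class mean vector over the selected features.
    sums, counts = {}, {}
    for x, label in train:
        s = sums.setdefault(label, [0.0] * len(idx))
        for k, i in enumerate(idx):
            s[k] += x[i]
        counts[label] = counts.get(label, 0) + 1
    centroids = {c: [v / counts[c] for v in s] for c, s in sums.items()}
    correct = 0
    for x, label in valid:
        sel = [x[i] for i in idx]
        # Predict the class whose centroid is closest in squared Euclidean distance.
        pred = min(centroids, key=lambda c: sum((a - b) ** 2 for a, b in zip(sel, centroids[c])))
        correct += pred == label
    return correct / len(valid)


def ga_feature_selection(train, valid, n_features, pop_size=20, generations=30,
                         crossover_rate=0.8, mutation_rate=0.05, size_penalty=0.01, seed=0):
    """Standard binary GA (not SIGA): each chromosome is a 0/1 feature mask."""
    rng = random.Random(seed)

    def fitness(mask):
        # Reward validation accuracy, lightly penalise large feature subsets.
        return centroid_accuracy(train, valid, mask) - size_penalty * sum(mask) / n_features

    def tournament(pop):
        # Binary tournament selection: the fitter of two random masks wins.
        a, b = rng.sample(pop, 2)
        return a if fitness(a) >= fitness(b) else b

    pop = [[rng.randint(0, 1) for _ in range(n_features)] for _ in range(pop_size)]
    best = max(pop, key=fitness)
    for _ in range(generations):
        children = [best[:]]  # elitism: the best mask survives unchanged
        while len(children) < pop_size:
            p1, p2 = tournament(pop), tournament(pop)
            if rng.random() < crossover_rate:
                cut = rng.randint(1, n_features - 1)  # one-point crossover
                child = p1[:cut] + p2[cut:]
            else:
                child = p1[:]
            # Bit-flip mutation.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        pop = children
        best = max(pop, key=fitness)
    return best, fitness(best)


def make_toy_data(n, rng):
    """Synthetic two-class data: features 0-1 are informative, 2-5 are noise."""
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        informative = [3.0 * label + rng.gauss(0, 0.5), -3.0 * label + rng.gauss(0, 0.5)]
        noise = [rng.gauss(0, 1.0) for _ in range(4)]
        data.append((informative + noise, label))
    return data


if __name__ == "__main__":
    rng = random.Random(42)
    train, valid = make_toy_data(60, rng), make_toy_data(40, rng)
    mask, score = ga_feature_selection(train, valid, n_features=6)
    print("selected features:", [i for i, b in enumerate(mask) if b],
          "fitness:", round(score, 3))
```

On the toy data only features 0 and 1 carry class information, so the GA should keep at least one of them, while the small size penalty nudges it toward dropping the noise features. Replacing `centroid_accuracy` with the validation accuracy of a feed-forward network would bring the sketch closer to the pipeline described in the abstract, at a much higher cost per fitness evaluation.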



License

Copyright (c) 2017 International Journal of Engineering and Technology Innovation

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.

Copyright Notice

Submission of a manuscript implies that the work described has not been published before and that it is not under consideration for publication elsewhere. If and when the manuscript is accepted for publication, authors retain copyright in their articles with no restrictions. Authors may also post the final, peer-reviewed manuscript version (postprint) to any repository or website.

Since Jan. 01, 2019, IJETI has published new articles under the Creative Commons Attribution Non-Commercial 4.0 International (CC BY-NC 4.0) License.

The Creative Commons Attribution Non-Commercial (CC-BY-NC) License permits use, distribution and reproduction in any medium, provided the original work is properly cited and is not used for commercial purposes.
