An RGB-NIR Image Fusion Method for Improving Feature Matching

DOI:

https://doi.org/10.46604/ijeti.2020.5177

Keywords:

image fusion, feature matching, near-infrared (NIR), detail boosting

Abstract

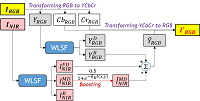

The quality of RGB images can be degraded by poor weather or lighting conditions, so images often need to be enhanced before computer vision techniques can work correctly. This paper proposes an RGB image enhancement method for improving feature matching, which is a core step in most computer vision techniques. The proposed method decomposes a near-infrared (NIR) image into fine detail, medium detail, and base images by using weighted least squares filters (WLSF) and boosts the medium detail image. Then, the fine and boosted medium detail images are combined, and the combined NIR detail image replaces the luminance detail image of the RGB image. Experiments demonstrate that the proposed method can effectively enhance RGB images, so that more stable image features are extracted. In addition, the method can minimize the loss of useful visual (or optical) information in the original RGB image, which can be used for other vision tasks.
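The pipeline described in the abstract can be sketched roughly as follows. This is an illustrative sketch under several assumptions, not the authors' implementation: a box blur stands in for the weighted least squares filter, the boost is a constant gain, luminance uses Rec. 601 weights, and colour is restored by adding the luminance change to every channel. The function names, radii, and the `boost` parameter are hypothetical.

```python
import numpy as np

# Assumption: Rec. 601 luma weights; the abstract does not name a colour space.
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])


def smooth(img, radius):
    """Box blur with edge padding. A simple stand-in for the WLS filter."""
    k = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    # Integral image with a leading row/column of zeros, so that
    # integral[i, j] is the sum of padded[:i, :j].
    integral = np.pad(padded, ((1, 0), (1, 0))).cumsum(0).cumsum(1)
    h, w = img.shape
    total = (integral[k:k + h, k:k + w] - integral[:h, k:k + w]
             - integral[k:k + h, :w] + integral[:h, :w])
    return total / (k * k)


def decompose(img, fine_radius=1, coarse_radius=4):
    """Split an image into fine detail, medium detail, and base layers."""
    s1 = smooth(img, fine_radius)    # lightly smoothed
    s2 = smooth(img, coarse_radius)  # heavily smoothed
    return img - s1, s1 - s2, s2     # the three layers sum back to img


def fuse(rgb, nir, boost=2.0):
    """Replace the RGB luminance detail with boosted NIR detail.

    rgb: float array (H, W, 3) in [0, 1]; nir: float array (H, W) in [0, 1].
    """
    lum = rgb @ LUMA_WEIGHTS
    _, _, lum_base = decompose(lum)
    nir_fine, nir_medium, _ = decompose(nir)
    # Combine fine detail with the boosted medium detail (constant gain assumed).
    nir_detail = nir_fine + boost * nir_medium
    new_lum = lum_base + nir_detail
    # Shift every channel by the luminance change; because the luma weights
    # sum to 1, the result has luminance new_lum before clipping.
    return np.clip(rgb + (new_lum - lum)[..., None], 0.0, 1.0)
```

In this sketch, `fuse(rgb, nir)` returns an enhanced RGB image of the same shape as `rgb`. Keeping the RGB base layer and shifting all channels equally is one simple way to preserve the original colour information; an edge-preserving filter in place of `smooth` would reduce halo artifacts around strong edges.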


Copyright Notice

Submission of a manuscript implies that the work described has not been published before and that it is not under consideration for publication elsewhere. Authors can retain copyright in their articles with no restrictions. Also, authors can post the final, peer-reviewed manuscript version (postprint) to any repository or website.

Since Jan. 01, 2019, IJETI has published new articles under the Creative Commons Attribution Non-Commercial 4.0 International (CC BY-NC 4.0) License.

The Creative Commons Attribution Non-Commercial (CC-BY-NC) License permits use, distribution and reproduction in any medium, provided the original work is properly cited and is not used for commercial purposes.
